At Scalyr, we’ve optimized our pipeline from data ingestion to query execution so our customers can search logs at TBs per second. In this post, we’ll discuss a specific part of that pipeline: regular expression (regex) parsing during Bloom filter creation. Read on to learn how we captured the huge query latency reduction enabled by Bloom filters with a custom-built regex parser, and how much speed we gained as a result.

A little background

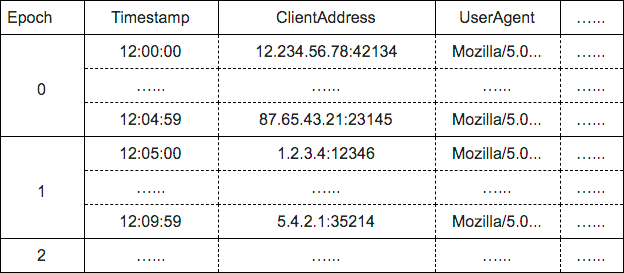

Scalyr organizes incoming log data in a columnar format: each text line is split into multiple “columns” that can be queried independently. We also partition the data into multiple “epochs,” where each epoch represents a fixed time range. For example, a typical HTTP access log in our database is organized like this:

The above structure enables us to quickly locate the column and epoch to execute the query. For example, if someone searches for “ClientAddress contains ‘2.34.56.7’” we simply run the string comparison against the “ClientAddress” column on the appropriate epochs. Of course, this means we must linearly scan this column across all epochs. Our architecture is designed for scans—which allows arbitrary queries from our customers—but we also have a few optimizations that let us significantly speed up common query patterns.

Bloom filters for speed

To optimize queries like the one above, we build Bloom filters to summarize the contents of individual columns (and BTW, we don’t do indexing). Let’s consider our example above, when a customer searches for “2.34.56.7” in the ClientAddress column. By defining a Bloom filter of all IP addresses in that column, we can skip scanning the actual data if “2.34.56.7” is not in the Bloom filter.

We don’t define Bloom filters for every possible query term—instead, we reserve this optimization for query terms that our customers use frequently. While the exact query terms and patterns vary by customer, certain types of strings (IP addresses, customer IDs, account IDs) figure often in their queries, and we can use Bloom filters to help optimize those. Each customer typically has fewer than 10 common patterns. We can add those matched patterns to one or more of the Bloom filters we create when we “freeze” the epoch—meaning that we convert the temporary representation used to hold incoming log data into a much more query-efficient form that is written to disk. We freeze the epochs only after we are confident all their data has been received.

Let’s use some real-life numbers to see the time and computing resources we can save with our example query. We generate a new epoch per account per shard every 5 minutes, and a single epoch on a shard can be as large as 2 Gb (gigabits) (uncompressed). If a user queries “ClientAddress contains ‘2.34.56.7’” with a date range of 24 hours, then we need to read 288 epochs to search for that IP address. If each epoch averages 1.4 Gb (72 Mb compressed), that’s 20 Gb to be loaded from disk. Assuming a 1 Gbps read speed, that’s 20 seconds to just fetch the data, not even including the actual search time! But if we first fetch the Bloom filters for each epoch (which average 1 Mb compressed), and then fetch only the epochs that have hits, we end up reading only 2.9 Gb (288*1 Mb + 288*72 Mb / 8), assuming a one-eighth Bloom filter hit rate. That’s an 85% savings in latency, dropping from 20 seconds to 2.9. We’re focusing on the Bloom filter here, but there are many more tweaks to handle other cases.

The problem

This all sounded great (at least we thought so) until we noticed that our freezing process was taking longer than it was supposed to. At least twice as long, in fact. Some of the slowness came from the creation of the Bloom filter itself. Specifically, it was from the regex matching of common patterns against each log line. (For example, we used [0-9]\\.[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9] to match IPv4 addresses.)

This kind of high-volume operation (at least once per log line) needs to be as fast as possible, otherwise, we can’t ingest customers’ logs in realtime at large scales. Even though we’re using our own version of the regex library, which mainly focuses on speed (we’ll discuss this in an upcoming post), it’s just not fast enough for our needs. Now what?

Hand-coded parsers!

Regex libraries tend to convert the given pattern into a finite state machine (FSM), which is great. But when it comes down to absolute fast matching for known patterns, you can’t beat a hand-coded FSM.

Here’s the code snippet of our IPv4 parser, which is essentially an optimized finite state machine.

boolean find(CharSequence text) {

// [0-9]\\.[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9] => the original regex

// 0000 1 2 333 4 555 6 7 0000 => value of "state" in each position when processing string like "aaa 1.234.567.8 bbb"

int state = 0, len = 0;

for (int i = 0; i < text.length(); i++) {

char c = text.charAt(i);

if (c == '.') {

switch (state) {

case 1:

case 3:

case 5: state++; break;

case 2:

case 4:

case 6: state = 0; break;

}

} else if (c >= '0' && c <= '9') {

switch (state) {

case 0:

case 1: state = 1; break;

case 2:

case 4: state++; len = 1; break;

case 3:

case 5: if (len == 3) state = 1; else len++; break;

case 6: return true;

}

} else {

state = 0;

}

}

return false;

}

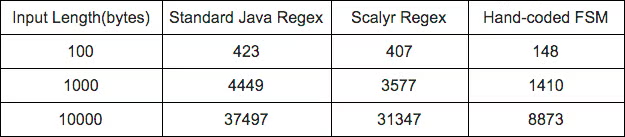

Now, it’s time for benchmarking (drumroll please). We ran three different regex matches (standard Java regex library, Scalyr regex library, and the hand-coded FSM), against the same inputs and recorded find() time in nanoseconds below.

Our own FSM proved to be 3–4 times faster! Thanks to these hand-coded FSMs, we’ve seen a substantial improvement in our ingestion pipeline, which brings more speed for our customers,

After all, that is what Scalyr is all about.