Welcome back to the re-launched PinnacleOne Executive Brief. Intended for corporate executives and senior leadership in risk, strategy, and security roles, the P1 ExecBrief provides actionable insights on critical developments spanning geopolitics, cybersecurity, strategic technology, and related policy dynamics.

For our second post, we summarize SentinelOne’s response to the National Institute of Standards and Technology (NIST) Request for Information on its responsibilities under the Executive Order on Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence issued on October 30, 2023.

Please subscribe to read future issues and forward this newsletter to your colleagues to get them to sign up as well.

Feel free to contact us directly with any comments or questions: [email protected]

Insight Focus | Safe, Secure, and Trustworthy AI

Artificial Intelligence permeates conversations in all sectors, offering promises innovators cannot resist and perils that have analysts nervous. SentinelOne has observed firsthand how both attackers and network defenders are increasingly using AI to improve their respective cyber capabilities.

In October, the White House issued an Executive Order to exert federal leadership on ensuring responsible AI use. The EO tasked NIST with creating AI evaluation capabilities and development guidelines. SentinelOne submitted a response to NIST’s Request for Information, informed by our own expertise and experience using AI to secure our clients’ systems.

Summary

In our response, we provided our assessment of the impact of emerging AI technologies on cybersecurity for both offensive and defensive purposes. While current effects are nascent, we expect these technologies to become increasingly used by both malign actors and network defenders – effectively we are entering the era of AI vs. AI.

It is critical that federal policy enable rather than hamstring research and development efforts that help American firms and government agencies keep pace with rapidly moving threats. We described one example of AI-enabled cybersecurity technology, Purple AI, which we developed to drive industry innovation and stay ahead of the risk curve.

We then provided our observations of AI risk management approaches across the client industries we serve. There, we summarized three different stances being adopted by firms with varying risk tolerances, market incentives, and industry considerations. We also provided a summary of the analytic framework we developed to advise firms on establishing and maintaining enterprise-wide AI risk management processes and tools.

Finally, we offered three specific recommendations that encourage NIST to emulate its successful approach with the Cybersecurity Framework. Our recommendations emphasize the importance and value of common ground truths and lexicon, industry-specific framework profiles, and a focus on voluntary, risk-based guidance.

Impact of AI on Cybersecurity

AI systems are force enablers for both attackers and defenders. SentinelOne assesses that threat actors, including state and non-state groups, are using AI to augment existing tactics, techniques, and procedures (TTPs) and improve their offensive effectiveness. We also see the rapid emergence of industry technologies that leverage AI to automate detection and response and improve defensive capabilities, including our own Purple AI.

Cyber Attacker Use of AI: Raising the Floor

Given the persistent fallibility of humans, social engineering has become a component of most cyber attacks. Even before ChatGPT, attackers used generative AI to win the trust of unsuspecting victims. In 2019, attackers spoofed the voice of a CEO using AI voice technology to scam another higher-up out of $243,000, and by 2022 two-thirds of cybersecurity professionals reported that deepfakes were a component of attacks they had investigated the previous year. The proliferation of these technologies will enable less-skilled, opportunistic hackers to conduct advanced social engineering attacks that combine text, voice, and video to increase the scale and frequency of access operations.

Meanwhile, highly capable state threat actors are best placed to fully leverage the AI frontier for advanced cyber operations, but these effects will remain hard to discern and attribute. The UK’s National Cyber Security Centre predicts that AI may assist with malware and exploit development, but that in the near term, human expertise will continue to drive this innovation.

It is important to note that many uses of AI for vulnerability detection and exploitation may leave no indicators of AI’s role in enabling the attack.

Cyber Defender Use of AI: Increase the Signal, Reduce the Noise

SentinelOne has learned lessons in developing our own AI-enabled cyber defense capabilities. Increasingly, we recognize that analysts can get overwhelmed by alert fatigue, forcing responders to spend valuable time synthesizing complex and ambiguous information. Security problems are becoming data problems. Given this, we designed our Purple AI system to take in large quantities of data and to let human analysts query a generative model with natural language inputs, rather than code, to accelerate threat hunting, analysis, and response.

By combining the power of AI for data analytics and conversational chats, an analyst could use a prompt such as “Is my environment infected with SmoothOperator?”, or “Do I have any indicators of SmoothOperator on my endpoints?” to hunt for a specific named threat. In response, these tools will deliver results along with context-aware insights based on the observed behavior and identified anomalies within the returned data. Suggested follow-up questions and best next actions are also provided. With a single click, the analyst can then trigger one or more actions while continuing the conversation and analysis.

Purple AI is an example of how the cybersecurity industry is integrating generative AI into solutions that allow defenders, like threat hunters and SOC team analysts, to leverage the power of large language models to identify and respond to attacks faster and more easily.

Using natural language conversational prompts and responses, even less-experienced or under-resourced security teams can rapidly expose suspicious and malicious behaviors that previously could be discovered only by highly trained analysts dedicating many hours of effort. These tools will allow threat hunters to ask questions about specific, known threats and get fast answers without needing to create manual queries around indicators of compromise.
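To make concrete what a "manual query around indicators of compromise" involves, here is a minimal Python sketch of the kind of matching logic an analyst might otherwise write by hand. It is an illustration only and does not represent how Purple AI works: the `EndpointEvent` record, the `hunt` function, the host names, and the indicator values are all hypothetical placeholders, not real telemetry or threat intelligence.

```python
from dataclasses import dataclass


# Hypothetical event record; real endpoint telemetry schemas differ.
@dataclass(frozen=True)
class EndpointEvent:
    host: str
    process_name: str
    file_sha256: str
    remote_domain: str


# Placeholder indicators for illustration only (not real threat intelligence).
PLACEHOLDER_IOCS = {
    "sha256": {"aaaa1111", "bbbb2222"},
    "domain": {"bad.example"},
}


def hunt(events, iocs):
    """Return (host, reason) pairs for events matching any indicator."""
    hits = []
    for e in events:
        if e.file_sha256 in iocs["sha256"]:
            hits.append((e.host, f"file hash {e.file_sha256}"))
        # Domain names are case-insensitive, so compare in lowercase.
        if e.remote_domain.lower() in iocs["domain"]:
            hits.append((e.host, f"domain {e.remote_domain}"))
    return hits


events = [
    EndpointEvent("laptop-01", "updater.exe", "aaaa1111", "cdn.example"),
    EndpointEvent("laptop-02", "browser.exe", "cccc3333", "BAD.example"),
    EndpointEvent("server-01", "backup.exe", "dddd4444", "files.example"),
]

for host, reason in hunt(events, PLACEHOLDER_IOCS):
    print(f"{host}: matched {reason}")
```

Even in this toy form, the analyst must know the event schema, the indicator list, and the matching rules in advance. That is the effort a natural language interface is meant to remove.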

How PinnacleOne Advises Firms on AI Risk Management

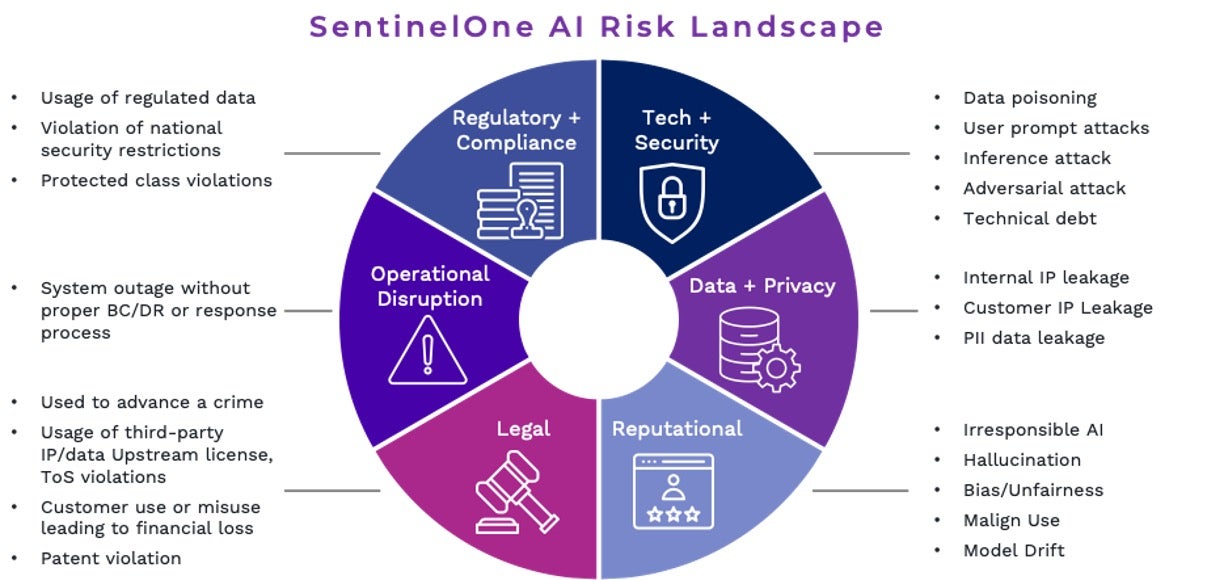

We tell firms to focus on six areas of AI risk management, with specific considerations for each:

| | |
| --- | --- |
| (1) Regulatory & Compliance | (4) Reputational |
| (2) Technology & Security | (5) Legal |
| (3) Data & Privacy | (6) Operational Disruption |

We have found that organizations that already have effective cross-functional teams to coordinate infosec, legal, and enterprise technology responsibilities are well positioned to manage AI integration. An area of common challenge, however, is the relationship between AI development engineers, product managers, trust & safety, and infosec teams. In many firms, for example, it is not clear who owns model poisoning/injection attacks, prompt abuse, or corporate data controls, among other emerging AI security challenges. Further, the shifting landscape of third-party platform integrations, open-source proliferation, and DIY capability sets hinders technology planning, security roadmaps, and budgets.

AI Safety and Security

While specific tools and technical processes will differ across industry and use-case, we observe common approaches to AI safety and security taking shape. These approaches combine traditional cybersecurity red teaming methodologies with evolving AI-specific techniques that ensure system outputs and performance are trustworthy, safe, and secure.

AI security and safety assurance involves a much broader approach than conventional information security practices (e.g., penetration testing) and must incorporate assessments of model fairness/bias, harmful content, and misuse. Any AI risk mitigation framework should encourage firms to deploy an integrated suite of tools and processes that address:

- Cybersecurity (e.g., compromised confidentiality, integrity, or availability);

- Model security (e.g., poisoning, evasion, inference, and extraction attacks); and

- Ethical practice (e.g., bias, misuse, harmful content, and social impact).

This requires security practices that emulate not only malicious threat actors but also how normal users may unintentionally trigger problematic outputs or leak sensitive data. To do this effectively, an AI safety and security team requires a mix of cybersecurity practitioners, AI/ML engineers, and policy/legal experts to ensure compliance and user trust.

Specialized use-cases will need specific practices and tools. For example, the production of synthetic media may require embedded digital watermarking to demonstrate provenance and traceability of training data and so avoid copyright liability.

Also, as AI agents become more powerful and prevalent, a much larger set of legal, ethical, and security considerations will be raised regarding what controls are in place to govern the behavior of such agents and constrain their ability to take independent action in the real world (e.g., access cloud computing resources, make financial transactions, register as a business, or impersonate a human).

Further, the implications for geopolitical competition and national security will become increasingly important as great powers race to capture strategic advantage. Working at the frontier of these technologies will involve inherent risk, and U.S. adversaries may adopt a higher risk tolerance in order to leap ahead. International standard setting and trust-building measures will be necessary to prevent a race-to-the-bottom competitive dynamic and security spiral.

To manage and mitigate these risks, we will need common and broad guardrails but also specific best practices and security tools calibrated to different industries. These should be based on the nature of the use-case, operational scope, scale of potential externalities, and effectiveness of controls, and should take into account a cost-benefit balance between innovation and risk. Given the pace of change, maintaining this balance will be an ever-evolving effort.

Policy Recommendations

Our recommendations to NIST emphasize the importance and value of common ground truths and lexicon, industry-specific framework profiles, and a focus on voluntary, risk-based guidance.

- NIST should align and cross-walk the Cybersecurity Framework (CSF) and AI Risk Management Framework (AI RMF) to ensure a set of common ground truths. The firms we advise often look to industry practices at competing firms to model their cybersecurity. Having a common set of terms and standards will enable companies to understand and strive towards industry benchmarks that both set the floor and raise the ceiling across diverse sectors.

- NIST should follow the CSF model and develop framework profiles for various sectors and subsectors, including profiles that range from small and medium-sized businesses to large enterprises. These framework profiles would help organizations in these diverse sectors align their organizational requirements and objectives, risk appetite, and resources against the desired outcomes of the AI RMF and its core components.

- In keeping with the successful CSF approach, NIST should seek to maximize voluntary adoption of guidance that addresses the societal impacts and negative externalities of AI that pose the greatest risk. Prescriptive regulation is a domain better suited to congressional and executive action, given the larger national security and economic considerations at issue.

We appreciated the opportunity to share with NIST our perspective on both AI risk and opportunity. We encourage our industry peers to maintain close policy engagement to ensure the U.S. keeps its innovative edge in AI-enabled cybersecurity and stays ahead of increasingly capable malign threats.