For a long time, it was believed that keyword indexing is required to create a scalable log data store. In fact, keyword indexing is an older method for creating a searchable data store and is quickly “running out of gas” as operational data sources and volume increase.

Keyword indexing techniques were developedand applied to search within documents or searching within or for websites. Indexing on keywords works well for static textual data, where you are looking for a top ten list, ranking pages or looking for language-based relevance. For example, indexing is a great way to see how many pages list a specific URL.

Keywords are used to map to human language, where the potential pool of words is limited and relatively small. In fact, most indexing is designed for at best a few thousand terms, used repeatedly.

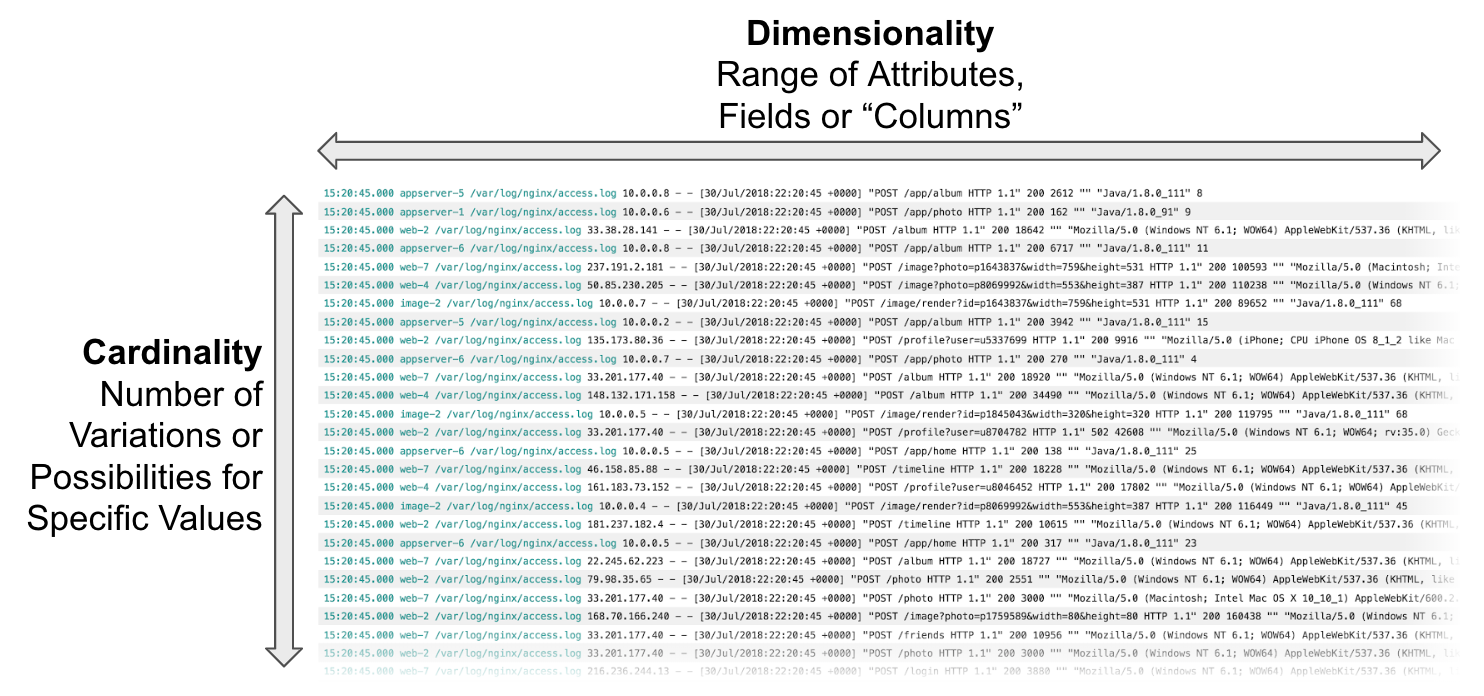

However, log data is nothing like this – and that’s why keyword indexing struggles as the amount of log data increases. In log files, the range of possible values can be orders of magnitude greater, including highly diverse values such as floating-point numbers. So log data has two common attributes that are not well-served by keyword indexes. Those attributes are “High-Cardinality” and “High-Dimensionality”.

Cardinality

In the context of databases, cardinality refers to the uniqueness of data values contained in a column. Low cardinality means that the column contains a lot of repetition in its data range. It is not common, but cardinality also sometimes refers to the relationships between tables. When trying to apply indexing to data with high cardinality, the index data itself can grow to be larger than the original dataset itself. Obviously, indexing becomes inefficient for high cardinality data.

Dimensionality

The concept of dimension also comes from databases and refers to category data. In logging, those could be attributes of a log entry such as time, id, values, durations, rates, network addresses, state information, errors, etc. Generally, dimensions are used to group quantitative data into useful categories and map those into columns. The number of attributes that comprise dimensionality of a log data set also have the effect of multiplying the size and complexity of the index.

Managing the Growing Index

In cases where an index is used as the mechanism to search log data, just the task of managing and supporting the index can outweigh the effort needed to resolve problems and improve the service being monitored.

We at Scalyr have a number of customers who had previously and unintentionally arrived at this situation, putting them “in between a rock and a hard place”. The index-based solutions became unwieldy and resulted in problems such as:

- Indexing log files can result in needing to store five to ten times the volume of the original data

- Transaction IDs are not keyword indexing approachable since each must be unique by definition

- “Auto-resolving queries”, the industry’s new euphemism for queries that time-out or fail

- Slowing ingest rates

- The need to re-start or re-index when changes are made

- Expensive storage and compute infrastructure are required to run the indexing tools

The bottom line, all of these add up to increased costs.

A Better Approach to Logging

There is a better way to create a searchable, efficient and cost-effective operational data store that avoids the burdens of indexing. In our next blog we will describe the basics of how Scalyr’s approach works.

*https://www.google.com/url?q=http://www.historyofinformation.com/detail.php?id%3D2065&sa=D&ust=1574717785036000&usg=AFQjCNHJhP2w3W4_nNxW8-mmqS4A3TdDBg