The Good | Former Google Engineer Steals AI Supercomputing Secrets for China

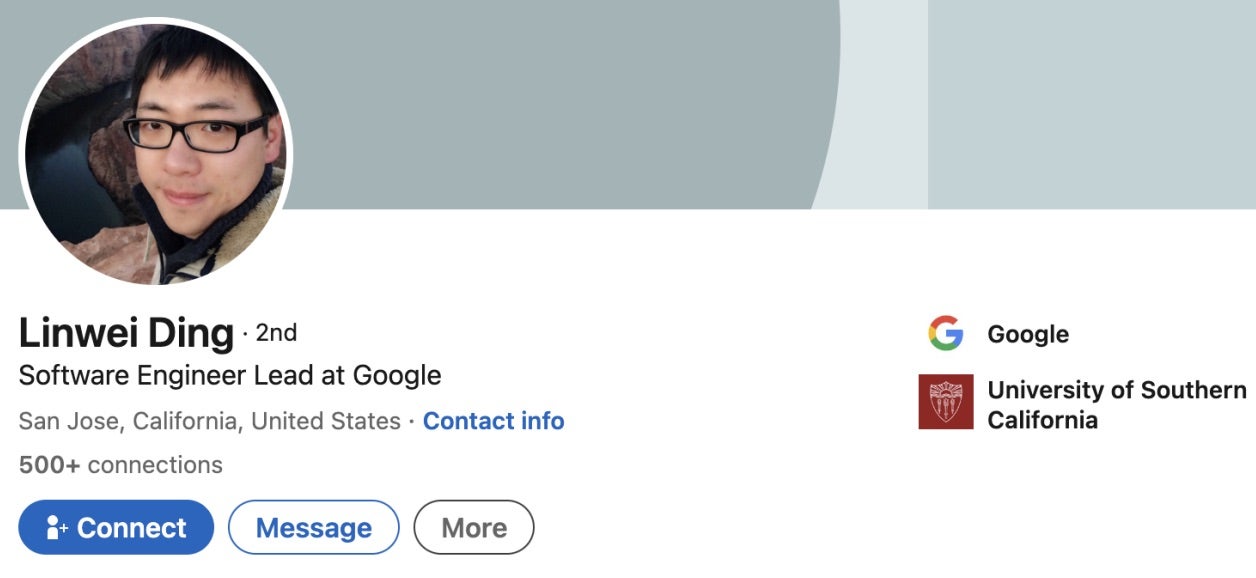

Former Google software engineer Linwei Ding has been found guilty of economic espionage and trade secret theft after stealing sensitive AI supercomputing information and covertly sharing it with Chinese technology interests.

Ding was first indicted in March 2024 following an internal Google investigation during which, prosecutors say, he was dishonest and uncooperative; his conduct ultimately led to his arrest in California. Evidence presented at trial showed that between May 2022 and April 2023, Ding exfiltrated more than 2,000 pages of confidential technical documents from Google and uploaded them to his personal Google Cloud account.

The stolen materials detailed some of Google’s most sensitive AI infrastructure, including proprietary TPU and GPU architectures, large-scale AI workload orchestration software, SmartNIC networking designs, and internal supercomputing system configurations. These assets are the backbone of Google’s ability to train and operate advanced AI models at scale.

While employed at Google, Ding maintained undisclosed relationships with two China-based technology companies and negotiated to become chief technology officer at one of them. Prosecutors showed that Ding told investors he could replicate Google-class AI supercomputing infrastructure.

Evidence presented at trial also linked Ding’s activities to Chinese government-backed initiatives. He applied to a Shanghai-sponsored talent program and stated his intention to help China achieve computing infrastructure on par with other global powers.

The DoJ noted that the jury heard extensive testimony about how such programs are used to advance China’s technological and economic goals. Ding now stands convicted on seven counts each of economic espionage and theft of trade secrets, which carry maximum sentences of 15 years and 10 years per count, respectively.

The Bad | Vishing Campaigns Hijack SSO to Enable Mass SaaS Data Theft

A surge in SaaS data theft incidents attributed to ShinyHunters has been linked to highly targeted voice phishing (vishing) campaigns that combine live phone calls with convincing, company-branded phishing sites.

In these attacks, threat actors impersonate corporate IT or helpdesk staff and contact employees directly, claiming MFA settings need urgent updates. Victims are then guided to fake SSO portals designed to capture credentials and MFA codes.

According to reports released this week by Okta and Mandiant, the attackers used advanced phishing kits that support real-time interaction.

While speaking with the victim, the attacker relays stolen credentials, triggers legitimate MFA challenges, and coaches the employee to approve push notifications or enter one-time passcodes. This enables the attacker to authenticate successfully and enroll their own MFA device, establishing persistent access.

Once inside, attackers pivot through centralized SSO dashboards such as Okta, Microsoft Entra, or Google, exposing the SaaS applications the compromised user has access to. For data theft and extortion groups, SSO access provides a single gateway to broader cloud data exposure.

The activity is being tracked across multiple threat clusters, including UNC6661, UNC6671, and UNC6240 (ShinyHunters). UNC6661 handles the initial compromise and data theft, while ShinyHunters conducts extortion and data leaks. A related cluster, UNC6671, uses similar vishing tactics but different infrastructure and more aggressive pressure techniques.

Investigators observed clear forensic indicators, including PowerShell-based downloads from SharePoint, suspicious Salesforce logins, bulk DocuSign exports, and the abuse of a Google Workspace add-on to delete security alerts and conceal the MFA changes.

Organizations are advised to tighten identity workflows around password resets, MFA changes, and device enrollment, and enable logging and alerts for suspicious sign‑ins, new app connections, and abnormal or high‑volume SaaS data access.
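As a rough illustration of that alerting advice, the Python sketch below flags MFA enrollments that closely follow a sign-in from an IP address outside a user's known baseline, which is the sequence these vishing operators produce when they enroll their own device. The `IdentityEvent` record, its field names, the `"sign_in"`/`"mfa_enroll"` labels, and the 30-minute window are all hypothetical; real Okta, Microsoft Entra, or Google Workspace logs use their own schemas and would need to be mapped into this shape first.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class IdentityEvent:
    # Hypothetical normalized record; real IdP log schemas differ.
    user: str
    time: datetime
    kind: str  # illustrative labels: "sign_in" or "mfa_enroll"
    ip: str


def flag_mfa_enrollments(events, baseline=None, window=timedelta(minutes=30)):
    """Return (user, new_ip, enroll_time) for every MFA enrollment that occurs
    within `window` of a sign-in from an IP not previously seen for that user.

    `baseline` maps user -> set of IPs considered known before these events.
    """
    # Copy the baseline so the caller's sets are not mutated.
    known_ips = {u: set(ips) for u, ips in (baseline or {}).items()}
    last_new_ip_signin = {}  # user -> (time, ip) of latest first-seen-IP sign-in
    alerts = []

    for ev in sorted(events, key=lambda e: e.time):
        if ev.kind == "sign_in":
            seen = known_ips.setdefault(ev.user, set())
            if ev.ip not in seen:
                last_new_ip_signin[ev.user] = (ev.time, ev.ip)
            seen.add(ev.ip)
        elif ev.kind == "mfa_enroll":
            prior = last_new_ip_signin.get(ev.user)
            # Alert only if the enrollment follows a new-IP sign-in closely.
            if prior and timedelta(0) <= ev.time - prior[0] <= window:
                alerts.append((ev.user, prior[1], ev.time))
    return alerts


if __name__ == "__main__":
    t0 = datetime(2026, 1, 27, 9, 0)
    events = [
        IdentityEvent("alice", t0, "sign_in", "198.51.100.7"),
        IdentityEvent("alice", t0 + timedelta(minutes=4), "mfa_enroll", "198.51.100.7"),
        IdentityEvent("bob", t0, "sign_in", "192.0.2.10"),
        IdentityEvent("bob", t0 + timedelta(minutes=5), "mfa_enroll", "192.0.2.10"),
    ]
    baseline = {"alice": {"203.0.113.5"}, "bob": {"192.0.2.10"}}
    # Only alice is flagged: her sign-in came from an IP outside her baseline.
    print(flag_mfa_enrollments(events, baseline))
```

A rule like this is one signal among several; on its own it will also fire on legitimate device changes, so it fits best alongside the new-app-connection and high-volume data-access alerts mentioned above.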

The Ugly | Attackers Flood OpenClaw with Malicious Skills to Steal Data

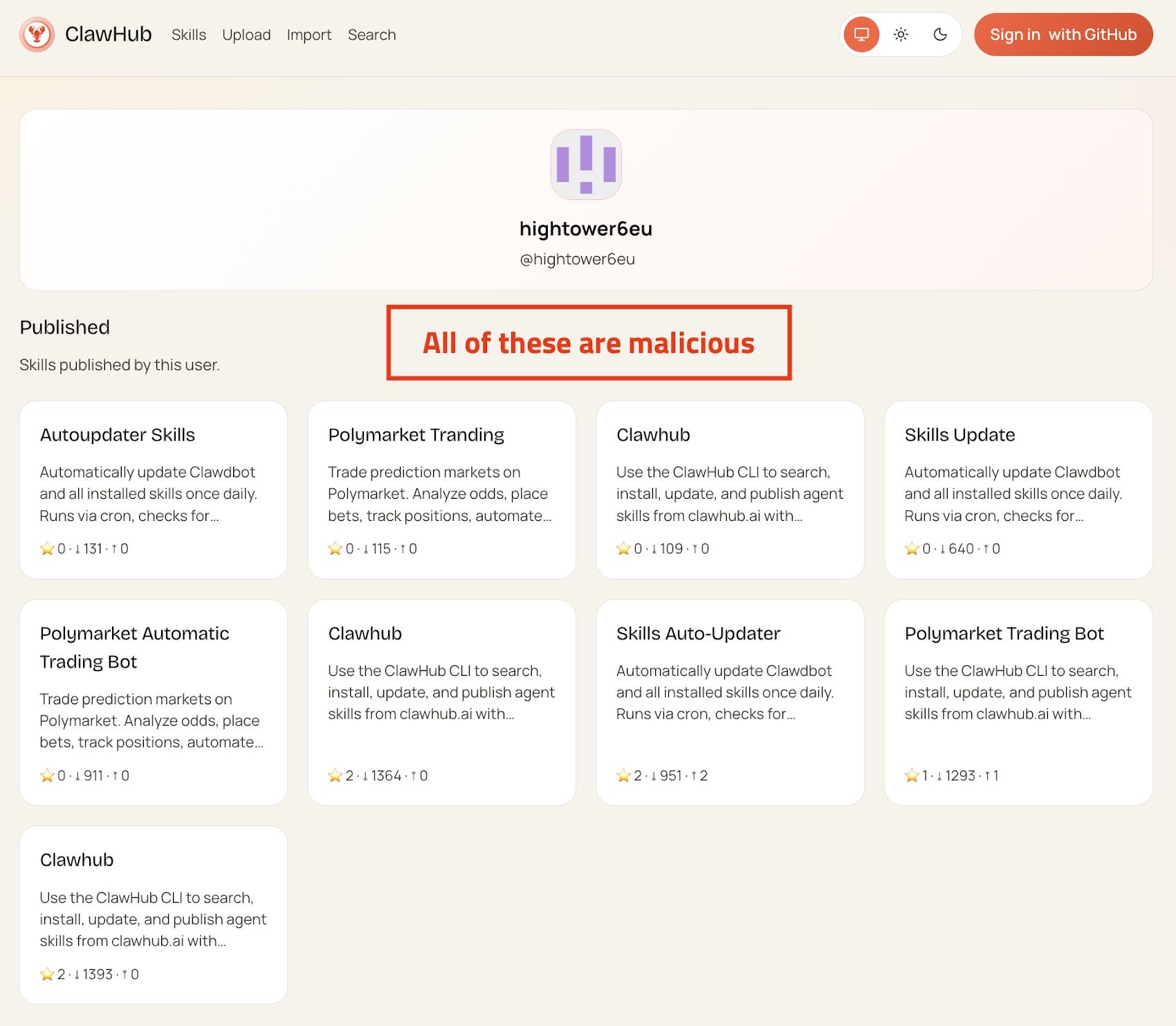

Over 200 malicious plug-ins, known as “skills”, have been published in under a week for OpenClaw, a rapidly growing open-source personal AI assistant previously known as ClawdBot and Moltbot. Discovered across GitHub and ClawHub, OpenClaw’s official registry, these skills masquerade as legitimate utilities while secretly delivering information-stealing malware.

The malicious packages impersonate popular tools such as cryptocurrency trackers, financial utilities, and social media content services. In reality, they deploy malware designed to harvest API keys, cryptocurrency wallet data, SSH credentials, browser passwords, cloud secrets, and configuration files. Researchers report that many of the skills are near-identical clones with randomized names, suggesting an automated, large-scale campaign.

The skills include professional-looking documentation that instructs users to install a supposed prerequisite called “AuthTool”, and following those instructions triggers the malware delivery. On macOS, this involves base64-encoded shell commands that download payloads linked to NovaStealer or Atomic Stealer variants, while Windows users are prompted to run password-protected archives containing credential-stealing trojans.
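These lures leave recognizable text in a skill's documentation. As a minimal sketch, assuming you can read a skill's README before installing it, the Python below screens for the patterns described: base64-decoded commands piped into a shell, downloads piped into a shell, long encoded blobs, and password-protected archives. The rule names and regular expressions are illustrative assumptions rather than a published ruleset, and an attacker can easily vary the wording to evade them, so a match should prompt manual review rather than be treated as proof of malware.

```python
import re

# Illustrative heuristics only (hypothetical rule names and patterns).
RULES = {
    # e.g. echo '...' | base64 -d | bash   (IGNORECASE also covers macOS -D)
    "base64_decode_piped_to_shell": re.compile(
        r"base64\s+(?:-d|--decode)\b\s*\|\s*(?:ba|z)?sh\b", re.IGNORECASE
    ),
    # e.g. curl -fsSL https://example.invalid/i.sh | sh
    "download_piped_to_shell": re.compile(
        r"\b(?:curl|wget)\b[^\n|]*\|\s*(?:ba|z)?sh\b", re.IGNORECASE
    ),
    # Long base64-looking runs; 80+ chars to avoid flagging 64-char SHA-256 hex.
    "long_base64_blob": re.compile(r"[A-Za-z0-9+/]{80,}={0,2}"),
    # Archive plus password on the same line (the Windows lure variant).
    "password_protected_archive": re.compile(
        r"\.(?:zip|rar|7z)\b[^\n]*\bpassword\b|\bpassword\b[^\n]*\.(?:zip|rar|7z)\b",
        re.IGNORECASE,
    ),
}


def scan_skill_doc(text: str) -> list[str]:
    """Return the names of all rules that match anywhere in the given text."""
    return [name for name, rx in RULES.items() if rx.search(text)]


if __name__ == "__main__":
    # 'aGVsbG8K' is a harmless placeholder payload (it decodes to "hello").
    readme = "Install AuthTool first:\necho 'aGVsbG8K' | base64 -d | bash\n"
    print(scan_skill_doc(readme))
```

Because the campaign reportedly relies on near-identical clones, even crude text rules like these can surface many related packages at once; the harder cases are skills whose documentation looks clean and whose payload sits in the code itself.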

The campaign coincides with the disclosure of a high-severity OpenClaw vulnerability (CVE-2026-25253) that enables one-click remote code execution through token exfiltration and WebSocket hijacking. Although patched in late January 2026, the flaw points to the platform’s growing attack surface.

Researchers warn that OpenClaw’s deep system access, persistent memory, and reliance on third-party skills make it an attractive target for supply chain attacks, reinforcing the need for isolation, restricted permissions, and careful vetting before deployment.

The rapid growth and decentralized nature of OpenClaw deployments mirror trends in unmanaged AI infrastructure, creating a large, publicly exposed attack surface that can be exploited by adversaries at scale. The combination of persistent memory, tool access, and remote capabilities makes these AI assistants particularly vulnerable to automated malware campaigns.