AI is being used in our everyday lives. With LLMs dominating every area, from work, school assignments, getting help with grocery shopping, calculating taxes, or just being a personal assistant, it stores and transmits a lot of info online. Prompt hackers know that LLMs are not safe or secure by design.

And it’s their chance to take advantage by hijacking all that sensitive information. One prompt is all it takes to steer AI in the wrong direction and give out your secrets by accident. In this guide, we will explore what prompt hacking is. You’ll know how it works, how to protect against it, and more below.

.png)

What Is Prompt Hacking?

Prompt hacking is the deliberate manipulation of AI language models through carefully crafted inputs designed to override security controls or extract unintended responses. These evasion attacks exploit the inability of large language models (LLMs) to distinguish between legitimate instructions and malicious commands in natural language processing, taking advantage of the model's tendency to treat all text with equal authority.

Attackers gain access through multiple entry points, like customer support chatbots, content analyzers, or compromised third-party data feeds your AI ingests. While prompt injection attacks pose theoretical risks to trained models, modern chatbots can implement guardrails to prevent embedded instructions from overriding system-level security.

Successful attacks can result in compromised proprietary systems, exposed sensitive data, unauthorized actions through connected applications, and significant reputational damage when safety controls are circumvented.

Why Prompt Hacking Attacks Are a Problem

Prompt hacking bypasses traditional security defenses by exploiting AI's inherent trust in input data, creating an entirely new attack surface that conventional tools can't protect. Unlike code-based vulnerabilities, these adversarial machine learning attacks manipulate deep neural networks at the semantic level:

- Business Impact: Attacks operate where AI processes language, bypassing firewalls to expose proprietary training data or trigger unauthorized actions without leaving conventional signatures.

- Expanding Attack Surface: Each AI deployment creates new entry points, especially when systems connect to backend infrastructure.

- Detection Challenges: Malicious prompts blend with legitimate requests, making pattern-matching detection inadequate compared to recognizable SQL signatures.

- Evolving Techniques: From simple "ignore previous instructions" commands to sophisticated poisoning attacks, new jailbreak methods emerge weekly.

- Compliance Violations: When AI systems process regulated data, prompt attacks may constitute a data breach under GDPR or HIPAA.

This emerging threat requires security teams to develop expertise spanning both traditional cybersecurity and defense against adversarial attacks for machine learning models.

4 Prompt Hacking Attack Categories

Real-time alert triage demands quick decisions. This matrix shows the different types of adversarial attack categories that prompt hacking can fall under:

| Attack Type | Goal | Technique | Detection Signals |

| Goal Hijacking | Override intended task flow | "Ignore all previous instructions and..." | Sudden context shifts, override phrases |

| Guardrail Bypass | Evade safety filters | Role-playing jailbreaks ("Act as unfiltered assistant") | Prohibited content after benign queries |

| Information Leakage | Extract system prompts or sensitive data | Query chains requesting internal instructions | Responses echoing configuration or secrets |

| Infrastructure Attack | Manipulate connected systems | Indirect injection triggering shell commands | Unexpected API calls or file access |

These categories often blend together. For example, an attack might extract secrets, then trigger API calls that compromise production systems, similar to how black box attacks work in computer vision when creating adversarial examples that make driving cars misinterpret a stop sign.

How to Prevent Prompt Hacking Attacks

Protecting AI systems from prompt hacking requires defense-in-depth rather than a single solution. Here are six protective measures that form a robust shield:

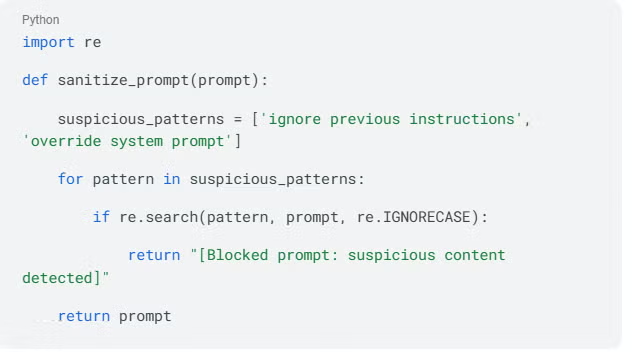

1. Validate and Sanitize Inputs

Before a prompt reaches your model, run it through pattern detection that identifies classic override phrases and suspicious encodings. Implement regex checks for known attack patterns while detecting Unicode homoglyphs that attackers use to evade detection.

Here's a simple Python function that implements basic pattern-based prompt filtering to catch common attack phrases:

Adversarial training with malicious examples can strengthen your filters while keeping false-positive rates low.

Adversarial training with malicious examples can strengthen your filters while keeping false-positive rates low.

2. Parameterize System Instructions

Clearly separate user text from system instructions using explicit delimiters. Wrap user inputs in markers (e.g., <|user|>{input}<|end|>) to prevent the model from confusing untrusted content with privileged commands.

Defensive distillation techniques can help machine learning models resist manipulation of input data.

3. Filter and Post-Process Outputs

Run every model response through multiple safety layers before delivery. Implement toxicity classifiers and policy engines that can refuse content violating standards. Add stateful checks that monitor for "guardrail probing" where white box attackers gradually escalate privileges.

4. Isolate LLM Environments

Host language models in dedicated containers, completely separated from core data stores. Route all API calls through tightly scoped proxies that restrict access to external resources. This containment ensures that even if an attacker manipulates the model into attempting a shell command or data exfiltration, the sandbox prevents execution.

5. Implement Least Privilege Controls

Grant LLMs only minimal credentials—read-only access to knowledge bases and no administrative permissions. Use short-lived API keys and fine-grained RBAC to ensure successful prompt attacks cannot escalate to higher-value systems.

6. Monitor Continuously for Anomalies

Treat every LLM interaction as a security event by logging prompts and responses in immutable storage. Feed this telemetry into your existing security monitoring systems to identify unusual patterns. The SentinelOne Singularity Platform exemplifies this approach by automating detection and reducing alert volume by 88%.

Singularity™ Platform

Elevate your security posture with real-time detection, machine-speed response, and total visibility of your entire digital environment.

Get a DemoDetection and Recovery Strategies

Store prompts, user identifiers, timestamps, and model responses in secure storage to replay sessions and trace how malicious instructions slipped through. Feed logs into your SIEM and deploy rules that surface attack signatures:

- Obfuscated payloads: Large Base64 strings often signal attempts to smuggle hidden instructions

- Context overrides: Phrases like "ignore all previous instructions"

- Anomalous volume: Sudden spikes in submissions from a single API key

When an attack is confirmed, isolate breached components, revoke exposed API keys, and disable downstream connectors. Purge any injected context from caches, patch vulnerable system prompts, and fine-tune filters to block discovered payload variants. Document every step in an incident report template.

Incident Response & Recovery Playbook

Even with robust defenses, a determined attacker may still slip through your guardrails. When that happens, you need a playbook that moves as fast as the exploit.

- Start with identification by surfacing the malicious prompt. Continuous logging of every request and response lets you trace the exact instruction chain the model followed. Pattern matching for tell-tale strings like "ignore previous instructions" or base64 blobs helps you flag suspicious activity in near-real time.

- Once you confirm an attack, move to containment by isolating the breached components. Spin up fresh sandbox instances, revoke API keys the prompt may have exposed, and throttle the user session. If your LLM is embedded in an agent workflow, disable downstream connectors until you can verify they weren't manipulated.

- Next, execute eradication by purging any injected context from caches or "memory" features, patching vulnerable system prompts, and fine-tuning filters to block the discovered payload variants. General cybersecurity practices recommend updating instruction templates after a breach as part of defense-in-depth, which may help reduce the risk of repeated exploits.

- Lastly, finish with lessons learned through a cross-functional debrief and a rollback test involving security engineers, machine learning specialists, and compliance leads. Industry experts recommend keeping a "human in the loop" to review post-incident model behavior and approve restored prompts.

Document every step in an incident report template that captures the malicious prompt, impact scope, timeline, and remedial actions. Security teams frequently pair the debrief with these tests to ensure infrastructure can be reverted instantly if a prompt ever triggers destructive changes again.

Stop Attacks Before They Start

Prompt hacking turns conversational interfaces into attack vectors that bypass traditional security. Similar to how computer vision systems can be fooled into misclassifying a stop sign, language models can be manipulated through carefully crafted inputs.

Defense requires multiple approaches: input validation, output filtering, environment isolation, continuous monitoring, and adversarial training. Quick wins like parameterized prompts raise the bar immediately, while deeper investments in sandboxing create resilient systems.

Treat prompt security as an ongoing discipline, not a one-time implementation. Attackers iterate rapidly, creating new techniques to evade detection. Organizations that embed security reviews into AI development lifecycles will stay ahead of adversaries who view every conversation as a potential compromise.

The frameworks outlined here give you the foundation to build protection before the next cleverly crafted sentence brings down your defenses.

Prompt Hacking FAQs

You're defending against linguistic manipulation, not malicious code. Attackers exploit the LLM's tendency to treat every piece of text as equally authoritative.

Yes. Private models face the same vulnerabilities. An insider or compromised data source can inject hidden instructions that the model follows without question.

Prompt-based data exfiltration creates the same compliance liabilities as any other breach. A single leaked prompt can trigger GDPR, HIPAA, or similar penalties.

Review filters, logs, and system prompts at least monthly or after any model update. Threat actors iterate quickly, and AI-assisted attacks accelerate constantly.

Engineering literacy, cross-modal threat analysis, and continuous red-teaming represent core competencies for AI security roles.