Tracking threat actor infrastructure has become increasingly complex. Modern adversaries rotate domains, reuse hosting, and replicate infrastructure templates across operations, making it difficult to connect isolated indicators to broader activity. Checking an IP, a domain, or a certificate in isolation can often return little of value when adversaries hide behind short-lived domains and churned TLS certificates.

As a result, analysts can struggle to see how infrastructure evolves over time or to identify shared traits like favicon hashes, header patterns, or registration overlaps that can link related assets.

To help address this, SentinelLABS is sharing a Synapse Rapid Power-Up for Validin. Developed in-house by SentinelLABS engineers, the sentinelone-validin power-up provides commands to query for and model DNS records, HTTP crawl data, TLS certificates, and WHOIS information, enabling analysts to quickly search, pivot through, and investigate network infrastructure for time-aware, cross-source analysis.

In this post, we explore two real-world case studies to demonstrate how an analyst can use the power-up to discover and expand their knowledge of threats.

Case Study 1 | LaundryBear APT: Body Hash Pivots

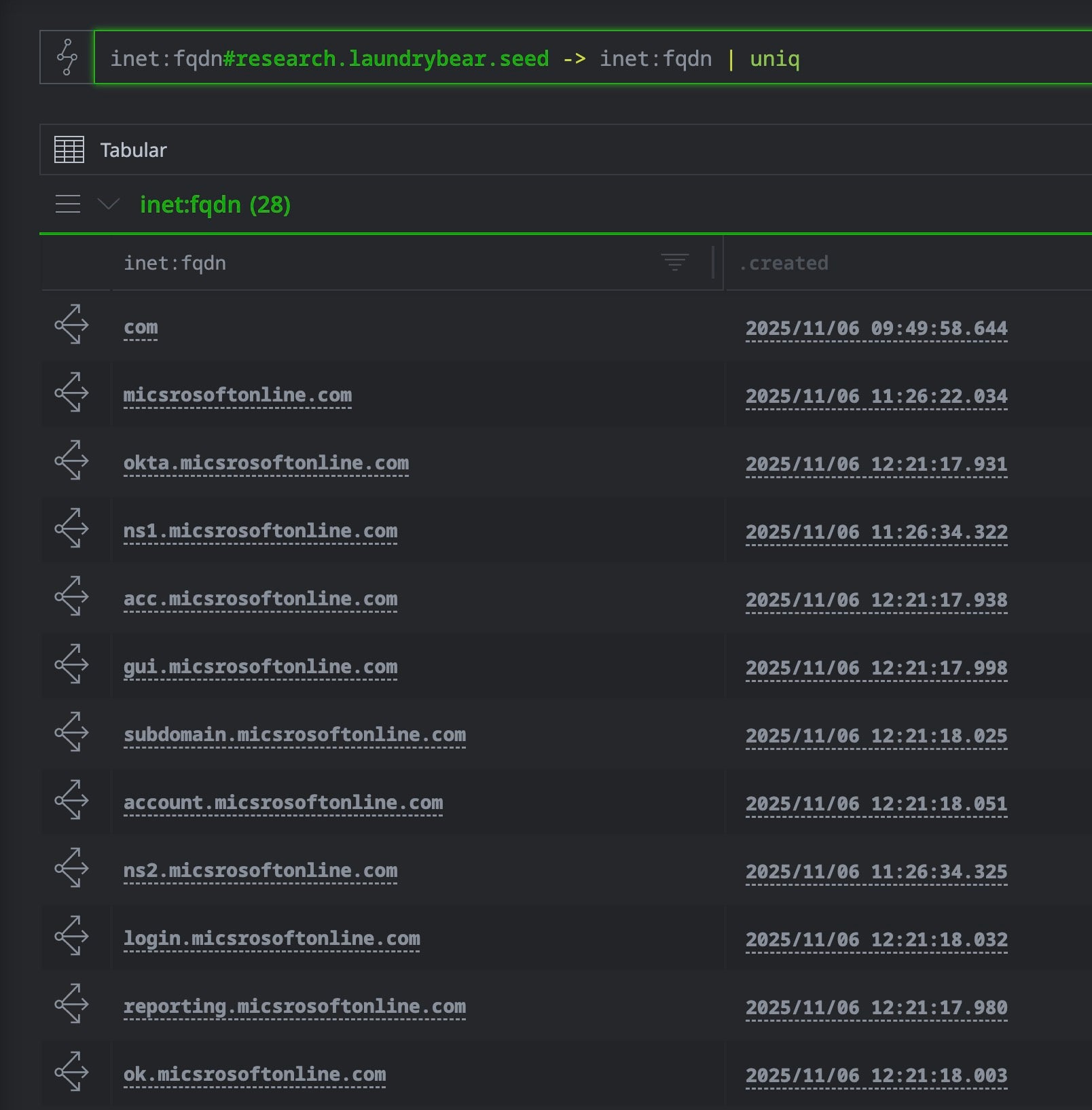

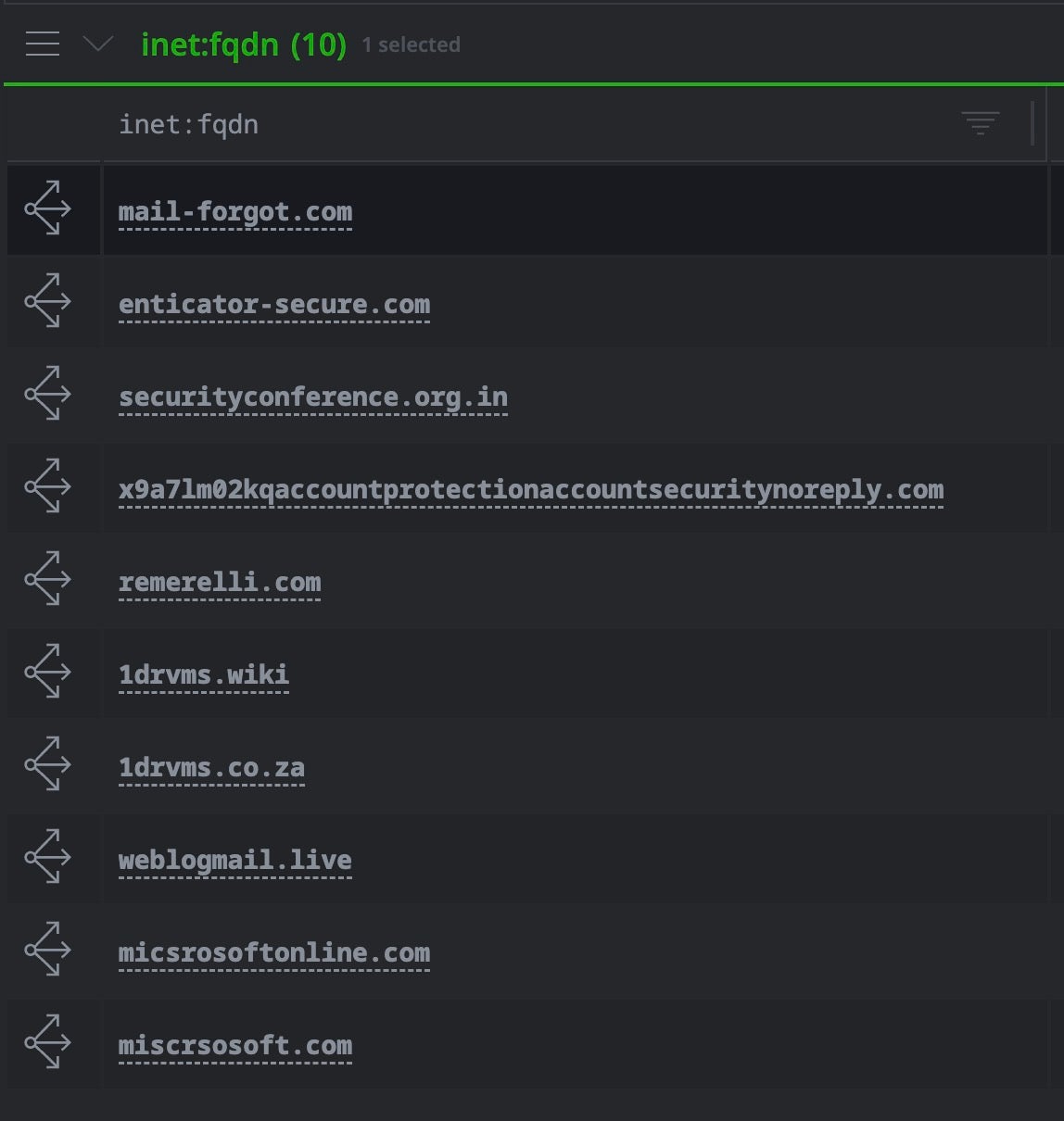

When Microsoft published indicators for LaundryBear (aka Void Blizzard), a Russian APT targeting NATO and Ukraine, the threat report included just three domains. Using the power-up’s HTTP body hash pivots, we can expand this seed set to over 30 related domains, revealing the full scope of the campaign’s infrastructure.

Initial Enrichment of Known Indicators

We begin with the s1.validin.enrich command, which serves as a unified entry point for all Validin data sources. Rather than running separate commands for DNS history, HTTP crawls, certificates, and WHOIS records, this single command executes comprehensive enrichment across all four datasets simultaneously.

The resulting node graph immediately reveals initial pivot opportunities—shared nameservers in DNS records, certificate SAN relationships, registration timing patterns, and HTTP fingerprint clusters—providing multiple investigative paths forward.

This rapid reconnaissance phase surfaces the most promising leads before committing to expensive deep pivots, helping analysts choose the optimal next step based on what patterns emerge from the enriched graph.

// Tag the published spear-phishing domain
[inet:fqdn=<phishing domain> +#research.laundrybear.seed]
// Enrich the initial domain
inet:fqdn#research.laundrybear.seed | s1.validin.enrich --wildcard
// Display all unique FQDNs related to this seed
inet:fqdn#research.laundrybear.seed -> inet:fqdn | uniq

Pivoting from Crawlr Data

The Validin crawler (Crawlr) is a purpose-built, large-scale web crawler operated by Validin that continuously scans internet infrastructure. Querying Validin through the sentinelone-validin power-up provides access to pre-existing crawl observations, allowing instant analysis without active scanning.

The crawler data for our seed domains was already downloaded during the initial s1.validin.enrich command. This created inet:http:request nodes in Synapse containing multiple HTTP fingerprints stored as custom properties: body hashes (SHA1), favicon hashes (MD5), certificate fingerprints, banner hashes, and CSS class hashes.

Each fingerprint type serves as a pivot point: body hashes reveal identical content, favicon hashes expose shared branding, certificate fingerprints uncover SSL infrastructure, and class hashes detect configuration patterns. Together, these pivots transform the initial three seed domains into a comprehensive infrastructure map.

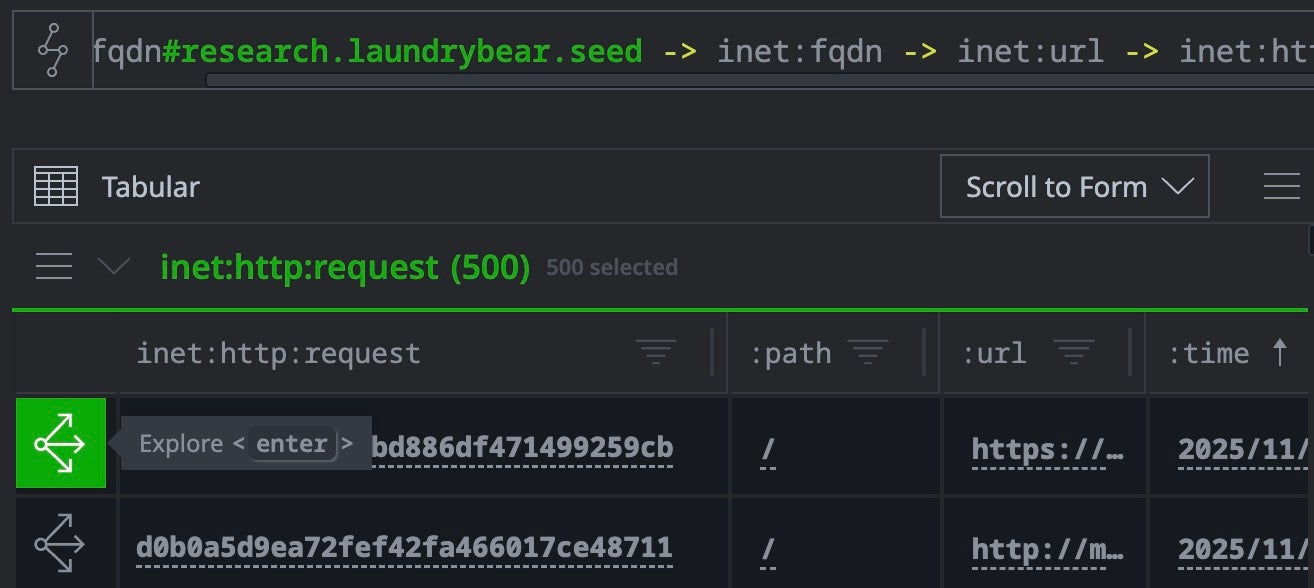

The query starts with our tagged seed domains, pivots to any related FQDNs discovered during enrichment, follows URL relationships, and lands on the actual HTTP request nodes captured by Validin’s crawler. Each inet:http:request node serves as a rich pivot point connecting to multiple content fingerprints and infrastructure properties.

// List all HTTP requests to all the subdomains
inet:fqdn#research.laundrybear.seed -> inet:fqdn -> inet:url -> inet:http:request

HTTP Pivot Discovery

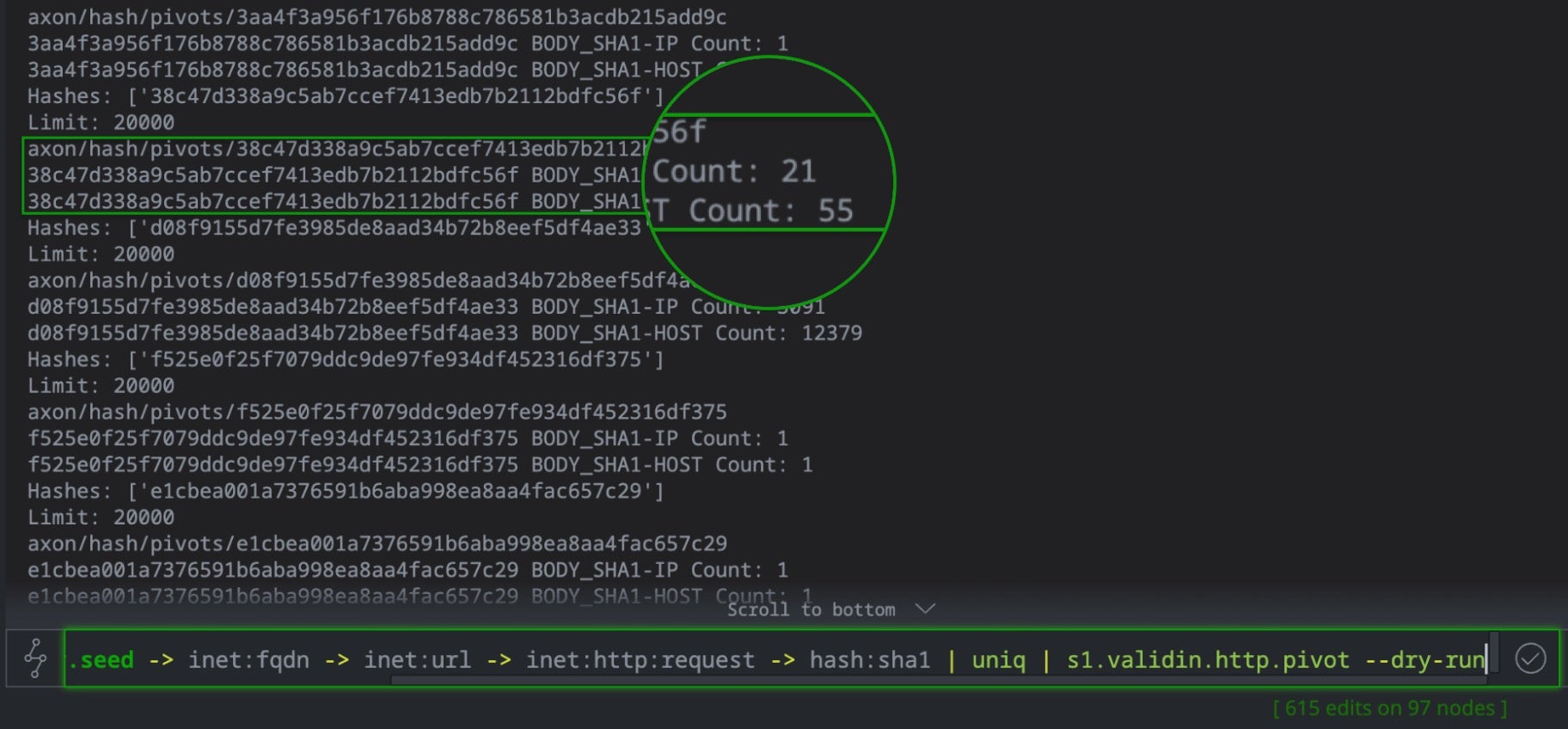

Validin’s Laundry Bear Infrastructure analysis identified synchronized HTTP responses across threat actor infrastructure. We can reach the same discovery using Storm’s HTTP pivot with statistical output.

When s1.validin.http.pivot is run with the --dry-run flag, it prints detailed occurrence statistics to the Storm console: how many times each HTTP fingerprint from the input HTTP response (body SHA1, favicon MD5, banner hashes, certificate fingerprints, header patterns) appears in Validin’s database. For example, a body hash might appear on 21 IPs and 55 hostnames, a favicon hash on 18 IPs and 52 hostnames, and a certificate fingerprint on 15 IPs and 48 hostnames.

The size of these counts is the critical indicator. High counts in the thousands indicate benign infrastructure like CDNs and can be dismissed from consideration. Very low counts (1-5) suggest isolated infrastructure. However, when a particular hash appears in the Validin crawler database with a moderate count (15-55 hosts with the same hash fingerprint in this case), it can indicate synchronized infrastructure provisioning: the exact pattern that characterized Laundry Bear’s coordinated buildout. In short, the --dry-run flag transforms expensive full-graph pivots into rapid statistical reconnaissance.
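The triage logic described above can be sketched in a few lines of Python. This is only an illustration: the `PivotStat` structure, the bucket names, and the two thresholds are assumptions made for this example and are not part of the power-up. The text does not say how to treat counts between the moderate range and the thousands, so the sketch simply treats everything between the two thresholds as a candidate.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration; they are not power-up defaults.
ISOLATED_MAX = 5     # at or below this, the fingerprint is effectively unique
BENIGN_MIN = 1000    # at or above this, assume CDN or default-page noise


@dataclass(frozen=True)
class PivotStat:
    """One fingerprint and how many hostnames share it (illustrative only)."""
    category: str   # e.g. "BODY_SHA1"
    value: str      # the hash value
    hostnames: int  # hostnames observed with this fingerprint


def triage(stat: PivotStat) -> str:
    """Bucket a fingerprint by how widely it is shared."""
    if stat.hostnames >= BENIGN_MIN:
        return "likely-benign"  # too common to be a useful pivot
    if stat.hostnames <= ISOLATED_MAX:
        return "isolated"       # little to pivot on
    return "candidate"          # moderate reuse, worth materializing


# Made-up counts in the spirit of the dry-run statistics described above.
stats = [
    PivotStat("BODY_SHA1", "aaa", 55),
    PivotStat("FAVICON_HASH", "bbb", 48213),
    PivotStat("BANNER_0_HASH", "ccc", 2),
]
for s in stats:
    print(s.category, triage(s))
```

In practice the thresholds would need tuning per investigation, since a small shared-hosting provider and a tightly templated campaign can produce similar counts.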

// Collect all the hash:sha1 indicators gathered in the previous step and perform a "dry run" with s1.validin.http.pivot to check statistics
inet:fqdn#research.laundrybear.seed -> inet:fqdn -> inet:url -> inet:http:request -> hash:sha1 | uniq | s1.validin.http.pivot --dry-run

Materializing and Summarizing Pivots in Synapse

After identifying promising body hash pivots through --dry-run statistics, we need to materialize the actual infrastructure and summarize the results. Consider this command:

// Materialize and summarize apex domains from a single pivot
hash:sha1=38c47d338a9c5ab7ccef7413edb7b2112bdfc56f | s1.validin.http.pivot --yield
// Pivot to apex domains
| +inet:fqdn -> inet:fqdn +:iszone=true | uniq

Omitting the --dry-run flag is critical here: without it, the Storm command creates and persists all discovered nodes in the Cortex. The full infrastructure graph (HTTP requests, certificates, DNS records) is ingested, making it available for future pivots and correlation with other intelligence sources. The final filtering and deduplication produces a concise summary: “This body hash appears across N distinct apex domains”, transforming raw occurrence statistics into actionable threat intelligence.

Results:

- 10 apex domains with identical body hash visible immediately (JavaScript redirect)

- Most are Microsoft/corporate service typosquats used for credential phishing

- 22 IP addresses shared by multiple domains, revealing hosting infrastructure

Note that the query here identifies ten domains in total, two more than reported in Validin’s analysis of the LaundryBear infrastructure. The extra context here comes from our additional use of the official synapse-psl power-up, which ingests and maintains the Mozilla Public Suffix List and ensures that inet:fqdn:zone correctly identifies true organizational boundaries.
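To show why the Public Suffix List changes the apex-domain count, here is a minimal Python sketch of suffix-aware zone detection. It is not how synapse-psl is implemented: the `PUBLIC_SUFFIXES` set is a tiny hand-picked subset chosen for this example, wildcard and exception rules are ignored, and the function names are hypothetical.

```python
from typing import Optional

# Tiny hand-picked subset for illustration only. A real deployment would
# load the full Mozilla Public Suffix List, including wildcard rules.
PUBLIC_SUFFIXES = {"com", "net", "uk", "co.uk", "io", "github.io"}


def naive_apex(fqdn: str) -> str:
    """Naive approach: always keep the last two labels."""
    return ".".join(fqdn.lower().rstrip(".").split(".")[-2:])


def apex_domain(fqdn: str) -> Optional[str]:
    """Return the longest matching public suffix plus one more label."""
    labels = fqdn.lower().rstrip(".").split(".")
    # Walk from the longest candidate suffix to the shortest.
    for i in range(len(labels)):
        suffix = ".".join(labels[i:])
        if suffix in PUBLIC_SUFFIXES:
            if i == 0:
                return None  # the name is itself a public suffix
            return ".".join(labels[i - 1:])
    return None  # no known suffix in our subset


for name in ("login.example.co.uk", "victim.github.io", "www.example.com"):
    print(name, "->", naive_apex(name), "vs", apex_domain(name))
```

The naive version collapses every github.io page into a single "domain", whereas the suffix-aware version keeps each one as its own organizational boundary, which is the kind of difference that can change a domain count.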

Tagging Discovered Infrastructure

Once we’ve identified related infrastructure through hash pivots, we need to tag these findings for tracking and future analysis. Storm provides inline tagging capabilities that mark nodes during the pivot workflow by appending this snippet at the end of a query that produces output to be tagged.

// Tagging
... [+#research.laundrybear.infra]

This workflow expanded three published indicators to 55 domains and 21 IP addresses through body hash pivots, revealing the campaign’s infrastructure scope.

Case Study 2 | FreeDrain: Large-Scale Pivot Operations

FreeDrain, an industrial-scale cryptocurrency phishing network, used 38,000+ lure pages across gitbook.io, webflow.io, and github.io. The campaign templated infrastructure with reused favicons, redirector domains, and phishing pages hosted on Azure and AWS S3—an ideal scenario for demonstrating the power-up’s capabilities.

Discovering Bulk Registration Patterns

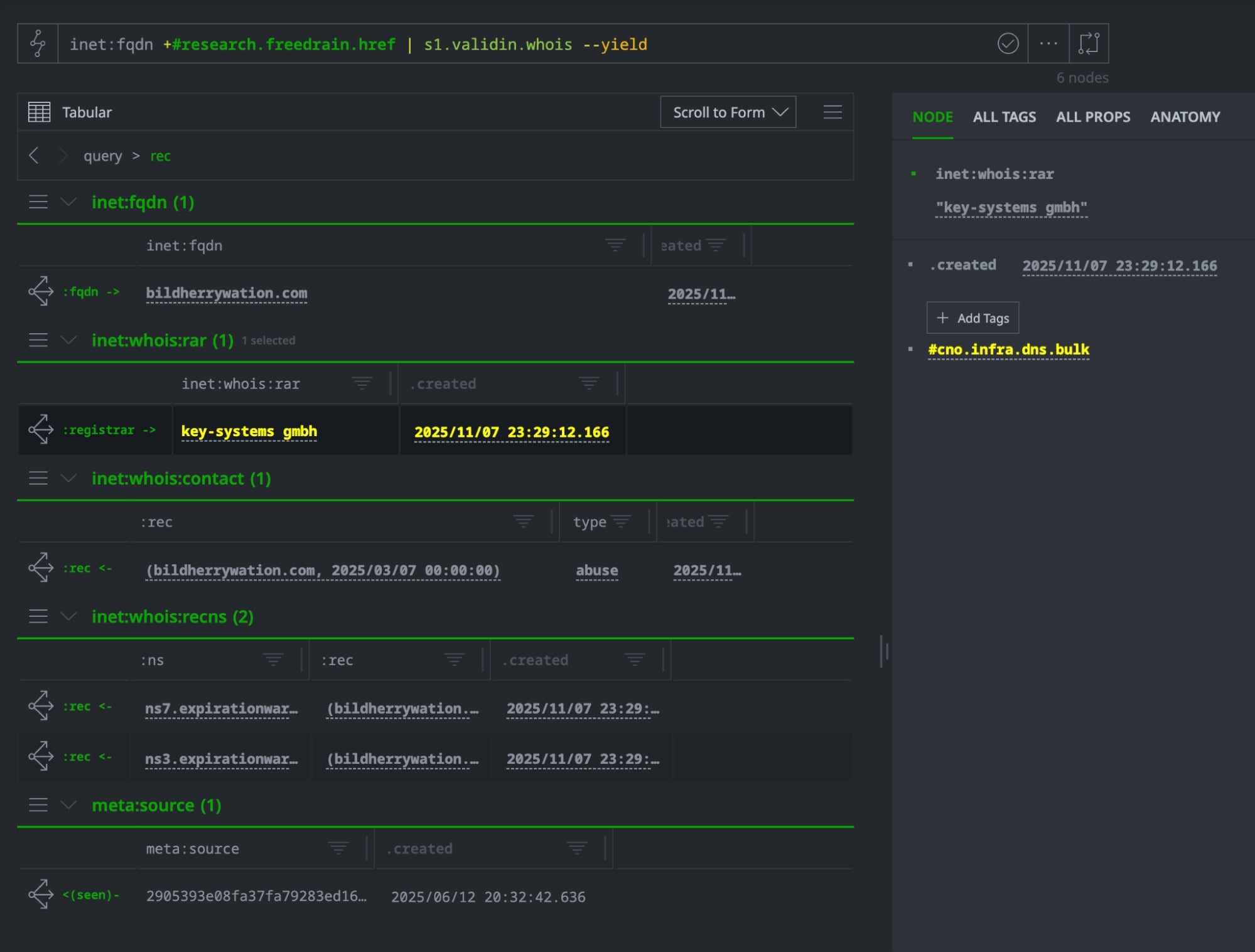

During the initial investigation, SentinelLABS identified a set of redirect domains used by FreeDrain operators to funnel victims from legitimate hosting platforms to attacker-controlled phishing infrastructure. These domains were tagged as #research.freedrain.href in our Cortex. To understand the operational infrastructure behind these redirectors in Synapse, we can enrich them through Validin’s WHOIS data:

// Enrich the domains with WHOIS data
inet:fqdn#research.freedrain.href | s1.validin.whois

This enrichment ingests historical WHOIS records for each redirect domain, creating inet:whois:rec nodes with registration dates, expiration information, and critically, registrar relationships.

When exploring the enriched graph in Synapse’s UI, several nodes immediately stand out (highlighted in yellow in our default workspace setup), indicating CNO (Computer Network Operations) tags from previous investigations.

The highlighting reveals that the shared registrar is already tagged as #cno.infra.dns.bulk, a designation in our environment for DNS registrars known to facilitate bulk domain registrations used in threat operations.

This isn’t a coincidental infrastructure overlap: we’ve immediately connected FreeDrain to a known malicious service provider that’s appeared in previous campaigns. The pre-existing #cno. tag transforms this from a simple infrastructure observation into a high-confidence attribution signal: FreeDrain operators are using the same operational resources as other documented threat actors.

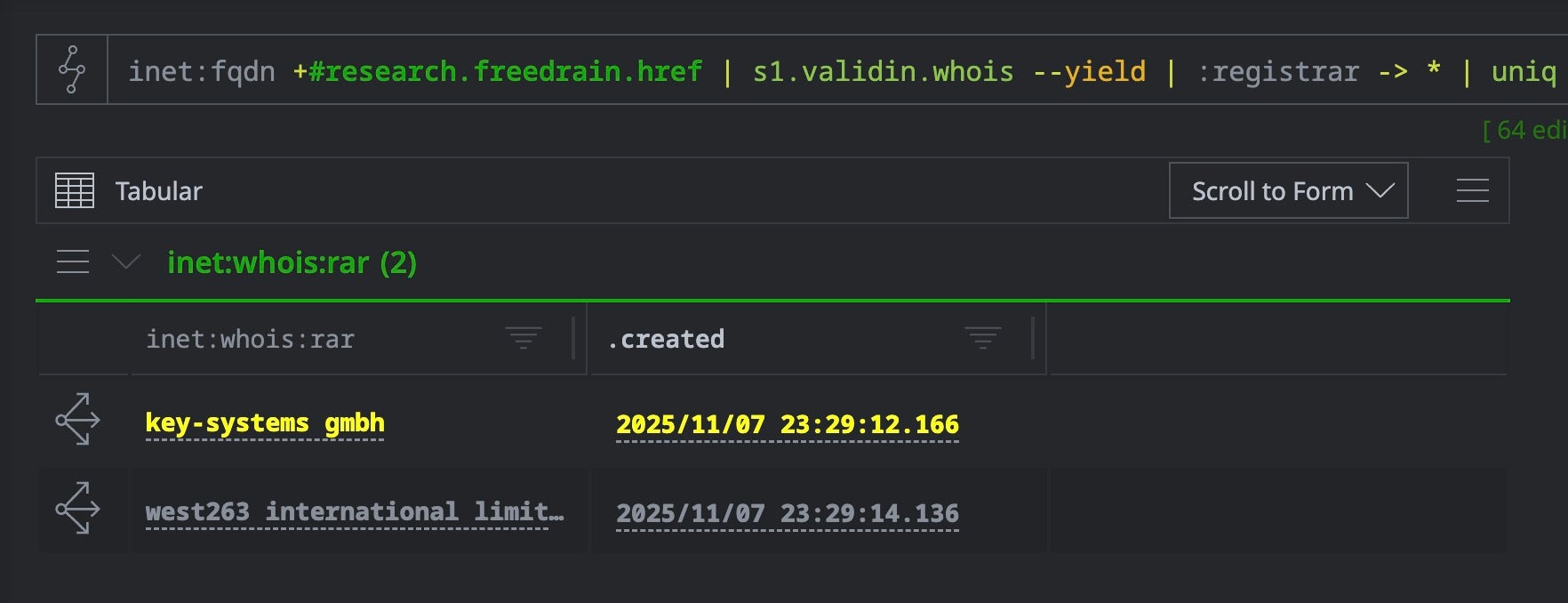

Before pivoting broadly, we can survey the registrar landscape within our FreeDrain redirect domain pool to understand infrastructure diversity:

inet:fqdn#research.freedrain.href | s1.validin.whois --yield | :registrar -> * | uniq

To isolate the domains registered through this bulk provider, we can filter directly by registrar name:

inet:fqdn#research.freedrain.href -> inet:whois:rec | +:registrar='<registrar name>'
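The same survey-then-filter idea can be illustrated outside of Storm. The Python sketch below uses made-up (domain, registrar) pairs as stand-ins for WHOIS records; the data and function names are hypothetical and nothing here calls Validin or Synapse.

```python
from collections import Counter

# Hypothetical (domain, registrar) pairs standing in for WHOIS records.
records = [
    ("redirect-a.example", "Registrar One"),
    ("redirect-b.example", "Registrar One"),
    ("redirect-c.example", "Registrar Two"),
    ("redirect-d.example", "Registrar One"),
]


def registrar_counts(rows):
    """Survey step: how many domains does each registrar account for?"""
    return Counter(registrar for _, registrar in rows)


def domains_for(rows, registrar):
    """Filter step: list the domains registered through one registrar."""
    return sorted(domain for domain, reg in rows if reg == registrar)


counts = registrar_counts(records)
top_registrar, _ = counts.most_common(1)[0]
print(counts)
print(domains_for(records, top_registrar))
```

Surveying first keeps the follow-up filter targeted: a registrar that dominates the pool is usually a more productive pivot than one that appears once.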

The FreeDrain case demonstrates how Validin’s WHOIS enrichment can transform large-scale phishing investigations. Starting with a handful of tagged redirect domains, a single enrichment command and two pivot queries exposed the full operational infrastructure—hundreds of domains provisioned through bulk registrars.

This is possible due to Synapse’s ability to correlate new campaign data with historical intelligence: the pre-existing #cno.infra.dns.bulk tags immediately identified FreeDrain’s infrastructure as part of a known malicious service ecosystem, providing attribution context that wouldn’t exist in isolated analysis.

Passive DNS

Domain Enrichments

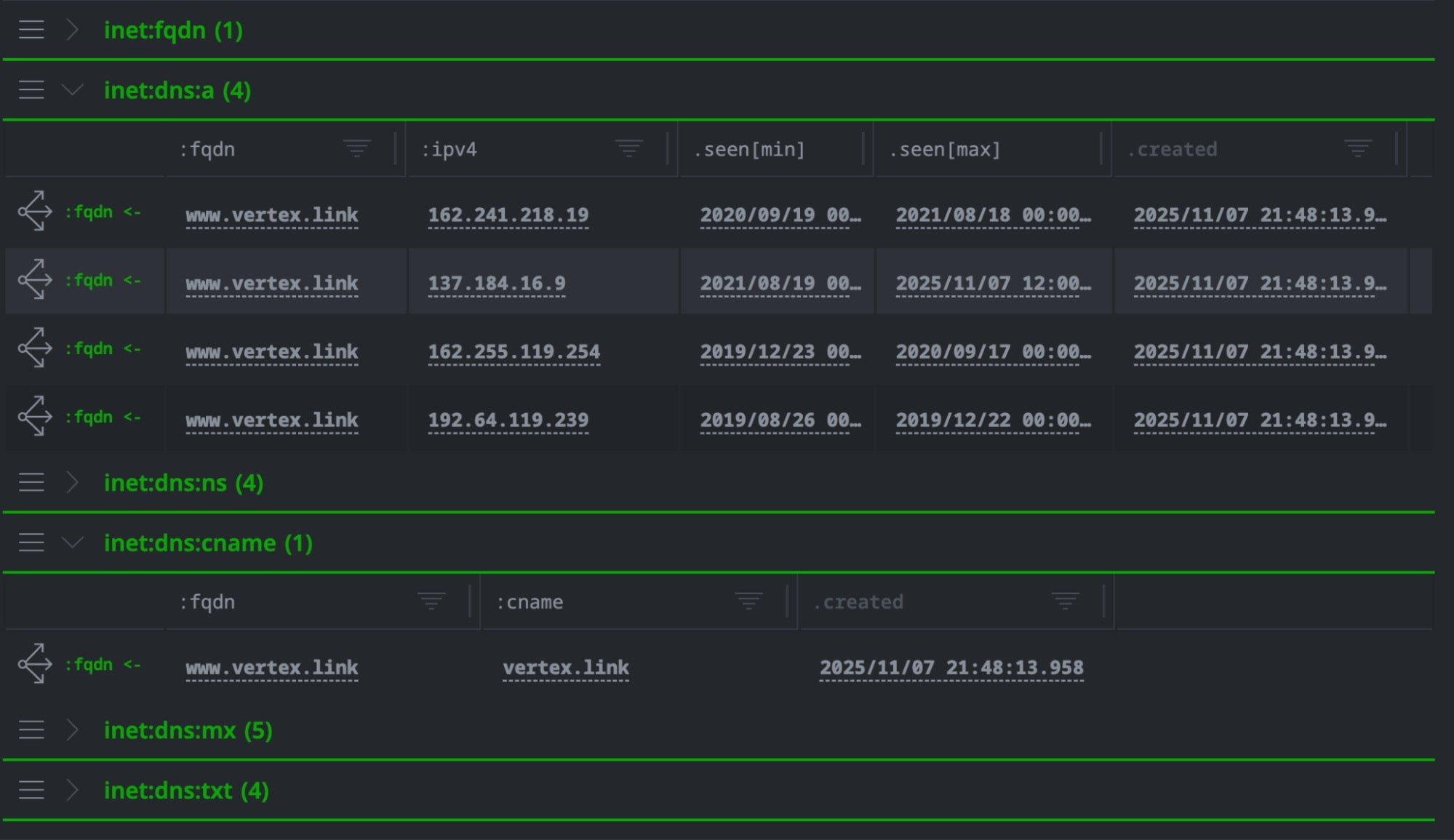

The sentinelone-validin power-up allows us to enrich a domain and pivot to find details not only about its current registration but also about the history of its records. With the --wildcard option, Validin returns the DNS records for all related subdomains.

// Enrich the domain with its registration information, and also include subdomains
[inet:fqdn=<target domain>] | s1.validin.dns --wildcard

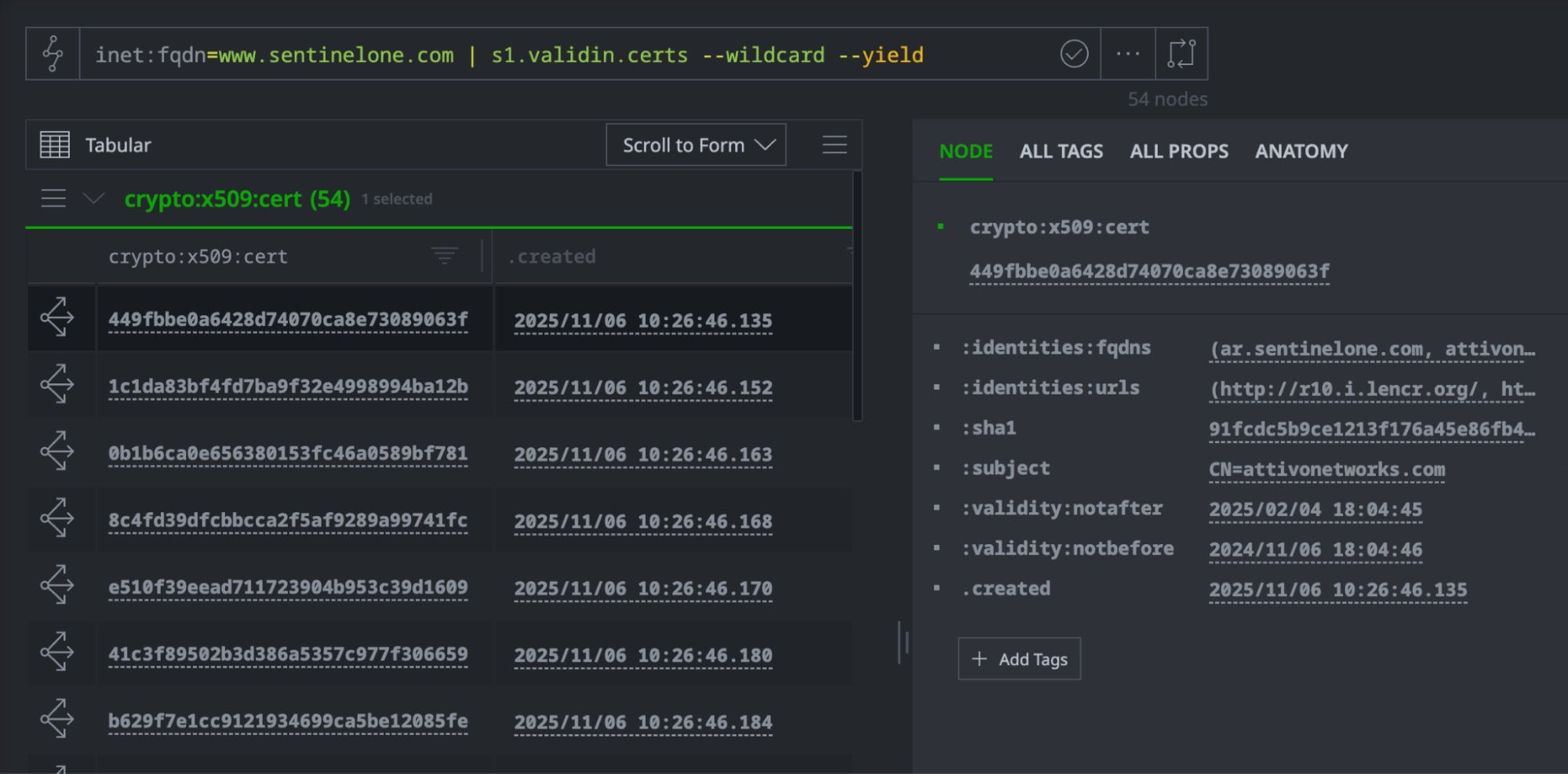

Certificate Transparency

Certificates often include multiple domains in Subject Alternative Name (SAN) fields, revealing infrastructure relationships. The following query can help us to quickly find all certificates issued for a domain and its subdomains:

// Find all domains that share certificates with our target
inet:fqdn=<target domain> | s1.validin.certs --wildcard --yield
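The SAN relationship described above amounts to a co-occurrence lookup. As a rough Python sketch with invented certificate identifiers and hostnames (no Validin or Synapse calls involved), finding names that share a certificate with a target looks like this:

```python
# Hypothetical certificates: identifier -> Subject Alternative Name entries.
certs = {
    "cert-1": ["login.example.com", "mail.example.com"],
    "cert-2": ["mail.example.com", "portal.example.net"],
    "cert-3": ["unrelated.example.org"],
}


def domains_sharing_certs(cert_sans, target):
    """Return other names listed on any certificate that also lists target."""
    related = set()
    for sans in cert_sans.values():
        if target in sans:
            related.update(name for name in sans if name != target)
    return sorted(related)


print(domains_sharing_certs(certs, "mail.example.com"))
```

In the graph these relationships already exist as pivots between nodes, so the sketch is only meant to make explicit what a shared certificate implies.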

WHOIS

The sentinelone-validin power-up is able to ingest historical WHOIS registration data, creating several node types that enable temporal analysis of infrastructure:

- inet:whois:rec – WHOIS records with registration dates (:created), expiration (:expires), last update (:updated), and registrar information

- inet:whois:rar – Registrar entities referenced by WHOIS records

- inet:whois:recns – Nameserver associations for each domain registration

- inet:whois:contact – Contact records for domain roles (registrant, admin, tech, billing) including name, organization, email, phone, and postal address details

Multiple historical records are created per domain, allowing analysts to track changes in infrastructure over time. For example, domains registered on the same day can indicate batch infrastructure provisioning. A query such as:

inet:fqdn=<target domain> | s1.validin.whois

returns all relevant WHOIS records for the target domain, allowing the analyst to pivot to other domains, registrars, or contacts that share temporal or structural relations.
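As an illustration of the same-day registration heuristic mentioned above, the following Python sketch groups hypothetical WHOIS creation dates by day and keeps only days with more than one domain. The records, the `batches` function, and the `min_size` parameter are assumptions made for this example.

```python
from collections import defaultdict
from datetime import date

# Hypothetical (domain, creation date) pairs standing in for :created values.
records = [
    ("alpha.example", date(2025, 3, 4)),
    ("bravo.example", date(2025, 3, 4)),
    ("charlie.example", date(2025, 3, 4)),
    ("delta.example", date(2024, 11, 19)),
]


def batches(rows, min_size=2):
    """Group domains by creation day and keep days with at least min_size domains."""
    by_day = defaultdict(list)
    for domain, created in rows:
        by_day[created].append(domain)
    return {
        day: sorted(names)
        for day, names in by_day.items()
        if len(names) >= min_size
    }


for day, names in batches(records).items():
    print(day.isoformat(), names)
```

A same-day cluster is a lead rather than proof, since popular registrars process many unrelated registrations every day. Combining it with a shared registrar or nameserver narrows it considerably.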

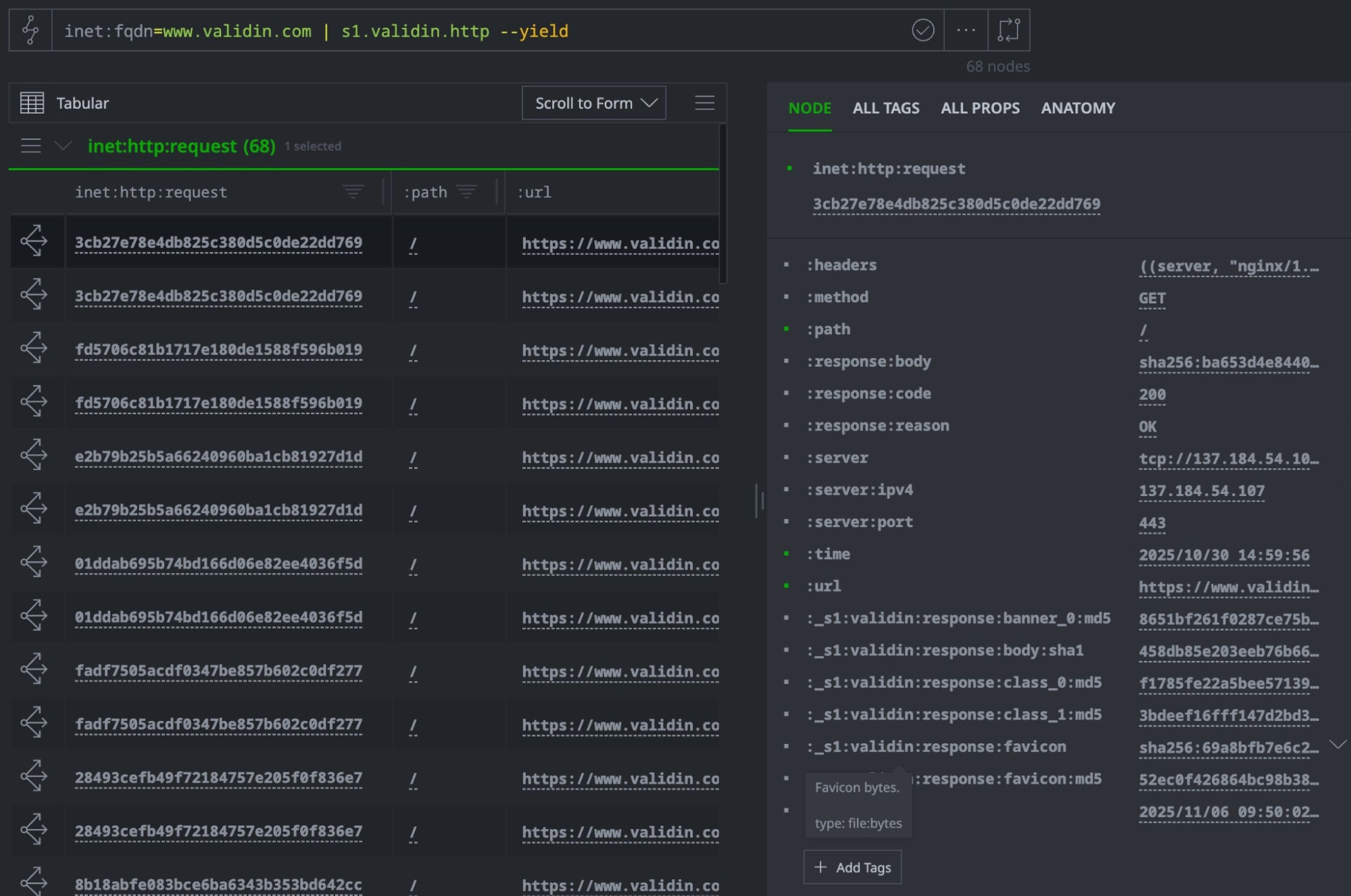

HTTP Crawler

One of the initial challenges we sought to address with the sentinelone-validin power-up was how to improve visibility into infrastructure that exists even as domains and hosting change. Validin’s crawler collects fingerprints such as page content, headers and favicons that persist across domain rotation and infrastructure churn. Leveraging these fingerprints allows analysts to identify patterns and connections that might otherwise be overlooked.

Host Response Fingerprinting

Validin’s HTTP crawler captures and fingerprints multiple aspects of web server responses—favicons, body content, HTTP headers, TLS banners, and HTML structure. The power-up parses these fingerprints and models them as pivotable properties in Synapse, enabling infrastructure clustering through content similarity.

inet:fqdn=<target domain> | s1.validin.http --yield

The crawler extracts:

- Favicon hashes (MD5) – Often forgotten when templating infrastructure

- Body hashes (SHA1) – Detect identical or templated page content

- Banner hashes – Fingerprint server software and configuration

- Header hashes – Identify shared backend infrastructure

- HTML class hashes – Cluster pages with similar structure
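The reason these hashes work as pivots is that identical bytes always yield identical digests, whatever domain serves them. The Python sketch below demonstrates this with the standard hashlib module. It assumes the hash is taken over the raw bytes; whether Validin normalizes content before hashing is not stated here, so treat that as an assumption.

```python
import hashlib


def body_sha1(body: bytes) -> str:
    """SHA1 hex digest of a response body (assumes raw, unnormalized bytes)."""
    return hashlib.sha1(body).hexdigest()


def favicon_md5(icon: bytes) -> str:
    """MD5 hex digest of favicon bytes (assumes raw, unnormalized bytes)."""
    return hashlib.md5(icon).hexdigest()


# A made-up templated redirect page served from two different hosts.
page = b"<script>location.href='https://example.invalid/'</script>"
site_a = body_sha1(page)
site_b = body_sha1(page)
site_c = body_sha1(page + b" ")  # a single extra byte changes the digest

print(site_a == site_b)  # same template, same fingerprint
print(site_a == site_c)  # trivially modified template, different fingerprint
```

This also shows the limitation: an operator who randomizes even one byte per deployment defeats exact body hashing, which is why the structural fingerprints in the list above are useful alongside it.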

Downloading and Parsing Content

The power-up includes a built-in download capability that retrieves actual file content for deeper analysis. The s1.validin.download command fetches HTTP response bodies, TLS certificates, and favicon images from Validin’s storage, creating file:bytes nodes in the Cortex.

Combined with Synapse’s fileparser.parse, this enables the extraction of embedded indicators such as URLs, email addresses, file hashes, IP addresses, and other IOCs hidden in page content or certificate metadata:

// Download and parse HTTP responses, certificates, and favicons
inet:http:request= | s1.validin.download --yield | fileparser.parse

In Synapse, inet:http:request is a GUID node representing a unique HTTP request event in the hypergraph. Each GUID node has a deterministic identifier usually derived from the request’s properties, enabling implicit deduplication and efficient correlation of network artifacts across the graph.

Hash-Based Pivoting

Traditional graph-based investigation requires loading all data into Synapse first, then querying relationships within your local graph. This works well for known datasets but becomes expensive when exploring unknown infrastructure: an analyst may ingest thousands of nodes only to discover they represent CDN infrastructure, shared hosting, or other benign patterns.

The HTTP pivot capability offers an alternative workflow: querying hashes directly via Validin’s API before loading data into Synapse. This enables selective enrichment, allowing the analyst to explore and evaluate potential pivot paths externally before committing to graph expansion.

// Pivot from a favicon hash to find related domains
hash:md5= | s1.validin.http.pivot --category FAVICON_HASH
// Pivot from an HTTP request node's embedded hashes
inet:http:request= | s1.validin.http.pivot --yield
// Discover related infrastructure via body hash
hash:sha1= | s1.validin.http.pivot --first-seen 2024/01/01

This approach provides flexibility: assess pivot scope first, then selectively load relevant data. The --dry-run flag shows result counts without creating nodes, letting the analyst preview results before ingestion.

The HTTP pivot command supports multiple content fingerprints:

- FAVICON_HASH – MD5 of favicon images

- BODY_SHA1 – SHA1 of HTTP response bodies

- BANNER_0_HASH – First banner hash

- CLASS_0_HASH / CLASS_1_HASH – Content classification hashes

- CERT_FINGERPRINT / CERT_FINGERPRINT_SHA256 – Certificate fingerprints

- HEADER_HASH – HTTP header pattern hashes

Multi-Source Comprehensive Enrichment

The sentinelone-validin power-up combines multiple data sources in ways that traditional tools cannot. Using this capability, the analyst can retrieve DNS history, HTTP crawl results, certificates and WHOIS records for a domain, all in one query. For example:

inet:fqdn=<target domain> | s1.validin.enrich

This single command populates the Cortex with a rich node graph, giving the analyst a unified view of the target infrastructure and enabling deeper correlation and pivoting across multiple sources.

Try It Yourself

SentinelLABS has open-sourced the sentinelone-validin power-up so other Synapse users can leverage these capabilities:

// Load and configure
pkg.load --path /path/to/s1-validin.yaml
s1.validin.setup.apikey
// Start investigating
inet:fqdn=<target domain> | s1.validin.enrich

Minimal Working Example (Docker Compose)

Before deploying the power-up to a production environment, test it locally. The power-up can be tested with the open-source version of Vertex Cortex as follows:

- Use the compose file at docker-compose.yml to bring up a local Cortex

- Load the sentinelone-validin package

- Open a Storm shell connected to it

# From the repository root
docker compose up -d cortex
docker compose --profile tools run --rm loadpkg
docker compose --profile tools run --rm storm

- The Storm shell connects to cortex and you can run the examples from this post, for example:

inet:fqdn=<target domain> | s1.validin.enrich

Storm Query Tips

- Deduplicate: add | uniq after broad pivots.

- Bound results: use --first-seen/--last-seen on s1.validin.* commands.

- Control fanout: add | limit during exploration to keep result sets manageable.

- Tag iteratively: tag intermediate sets (e.g., +#rep.investigation.2026_1.stage1) to branch workflows and compare clusters.

Conclusion

Using the LaundryBear and FreeDrain campaigns as case studies, we’ve explored how the sentinelone-validin power-up leverages Validin’s multi-source enrichment and HTTP fingerprinting to reveal wider campaign infrastructure within Synapse, from just a handful of indicators.

The integration makes it easier to follow how infrastructure changes over time, trace shared resources across campaigns, and connect what might first appear as isolated indicators. With this richer context available directly in Synapse, analysts can move from collection to understanding with greater speed and confidence in their conclusions.

The SentinelLABS team welcomes feedback and pull requests on the sentinelone-validin GitHub repository to help refine and extend its capabilities for the wider research community.